As I’m fresh out of Android development boards, I decided to repurpose a Raspberry Pi 4 for Android experimentation, in preparation for the next version of this book: http://newandroidbook.com/. I had originally tried this with my Raspberry Pi 3A+, but only found real support for the 3B.

First, you need a version of Android that is customized for the Pi 4. I used this repo and followed its guide: https://github.com/android-rpi/device_arpi_rpi4

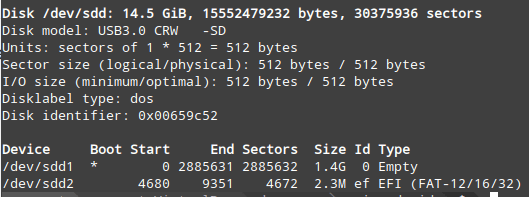

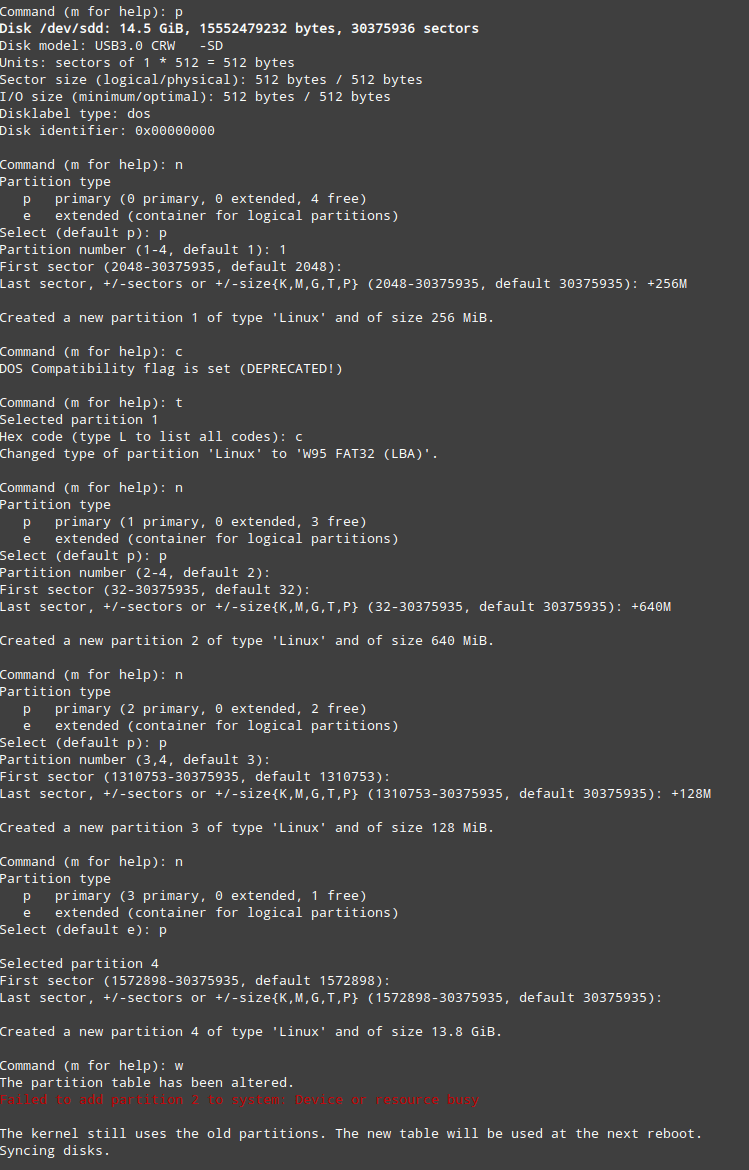

Note: this is how I formatted the SD card. First, I listed all disks to find the device for my SD card, which was /dev/sdd:

    sudo fdisk -l

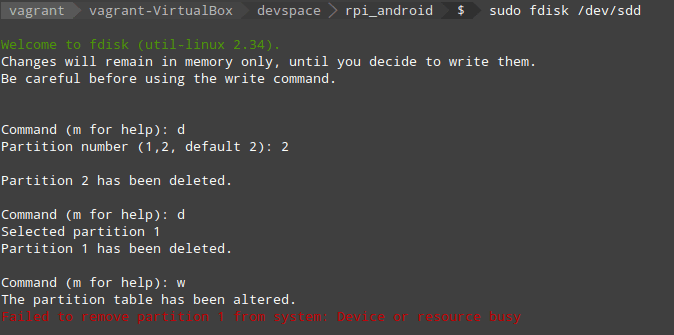

My card was not empty, so I first removed the existing partitions using fdisk.

Next, I created the partitions as specified by the guide:

    # Prepare sd card
     Partitions of the card should be set-up like followings.
      p1 256MB for BOOT : Do fdisk : W95 FAT32(LBA) & Bootable, mkfs.vfat
      p2 640MB for /system : Do fdisk, new primary partition
      p3 128MB for /vendor : Do fdisk, new primary partition
      p4 remaining space for /data : Do fdisk, mkfs.ext4
     Set volume label for /data partition as userdata
      : use -L option of mkfs.ext4, e2label command, or -n option of mkfs.vfat

I again used fdisk to create the partitions:

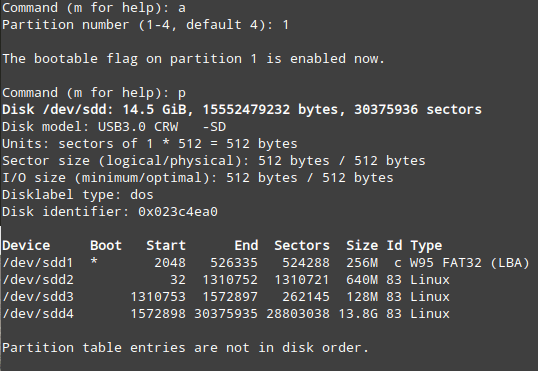

(I forgot to capture the step that makes /dev/sdd1 bootable. In fdisk, the 'a' command toggles the bootable flag on a partition.)

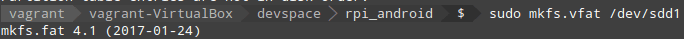

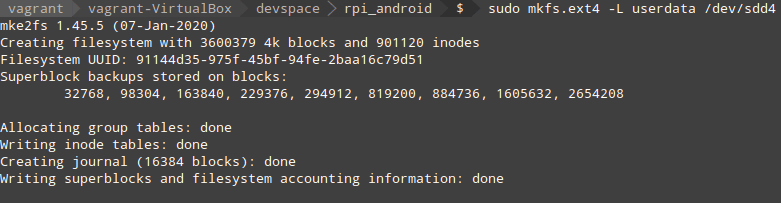

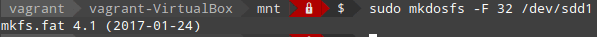
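The interactive session above can also be expressed as a script for sfdisk, the non-interactive sibling of fdisk. This is a sketch, not the method used in this post: it assumes the guide's MB figures can be treated as MiB (which is what fdisk's +256M notation means), and the function name print_layout is made up for illustration. It only prints the layout and writes nothing to the card.

```shell
# Hypothetical helper: prints an sfdisk script matching the guide's layout.
# Each partition line is "start,size,type,bootable"; an empty field means
# "use the default". Type c is W95 FAT32 (LBA), L is Linux, * marks bootable.
print_layout() {
    cat <<'EOF'
label: dos
,256MiB,c,*
,640MiB,L
,128MiB,L
,,L
EOF
}

# Review the output first. Nothing here touches the card.
print_layout
```

Once the device name has been double-checked with sudo fdisk -l, piping this output into sudo sfdisk /dev/sdd would apply it, replacing the existing partition table on that device.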

To set up the file systems correctly, I ejected and reinserted the SD card so that the new partition table was re-read, and ran the following mkfs commands. The first makes /dev/sdd1 a vfat boot partition, and the second makes /dev/sdd4 an ext4 partition with the label ‘userdata’.

I then formatted the DOS partition:

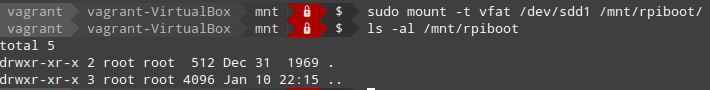
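Since commands cannot be copied out of a screenshot, here is a text sketch of the two formatting steps described above. The mkfs.vfat call and the -L option of mkfs.ext4 come from the guide; the helper name print_mkfs_commands is hypothetical, and the commands are printed rather than run so the device names can be checked first.

```shell
# Hypothetical helper: prints the two mkfs commands for a given disk.
# $1 = whole-disk device, e.g. /dev/sdd (the device used in this post).
print_mkfs_commands() {
    disk=$1
    echo "sudo mkfs.vfat ${disk}1"               # p1: FAT boot partition
    echo "sudo mkfs.ext4 -L userdata ${disk}4"   # p4: /data, labelled userdata
}

print_mkfs_commands /dev/sdd
```

Note that card readers exposed as /dev/mmcblk0 name their partitions mmcblk0p1, mmcblk0p4 and so on, so the simple suffix used here only fits /dev/sdX-style names.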

After formatting, I copied the system and vendor images across to their respective partitions as shown in the guide. Copying files to the DOS partition requires mounting it first (I made a directory /mnt/rpiboot as the mount point):

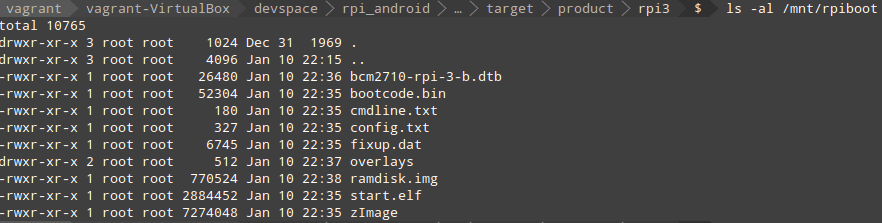
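As a text companion to the screenshot, the image copy and the mount can be sketched as follows. The assumptions here are mine: that system.img and vendor.img sit in the current directory, that they are written raw with dd to the second and third partitions, and that bs=1M is an acceptable block size. The helper name print_copy_commands is hypothetical, and the commands are only printed so they can be compared against the guide before being run.

```shell
# Hypothetical helper: prints the copy and mount commands.
# $1 = whole-disk device (e.g. /dev/sdd), $2 = mount point for the boot partition.
# Assumption: system.img and vendor.img are in the current directory.
print_copy_commands() {
    disk=$1
    mnt=$2
    echo "sudo dd if=system.img of=${disk}2 bs=1M"   # p2: /system
    echo "sudo dd if=vendor.img of=${disk}3 bs=1M"   # p3: /vendor
    echo "sudo mkdir -p ${mnt}"
    echo "sudo mount ${disk}1 ${mnt}"                # p1: boot partition
}

print_copy_commands /dev/sdd /mnt/rpiboot
```

Once the boot files are in place, unmounting with sudo umount /mnt/rpiboot makes sure they are flushed to the card before it is removed.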

I then followed the guide's commands for copying files to the boot partition; the result looks like this: